I'm a big fan of the game Zendo. But I don't think it suits large groups very well. The more other players there are, the more time you spend sitting around; and you may well get only one turn. I also think a game tends to take longer with more players.

Here's an alternate ruleset you can use if you have a large group. I think I've played it 2-3 times, with 15-ish? players, and it's finished in about 30 minutes each time, including explaining the rules to people who'd never played Zendo before.

For people who know the typical rules, here's the diff from them:

- After the initial two samples are given, players run experiments in real time, whenever they feel like they have one they want to run, and the universe marks them. There's no turn taking, and no need to wait for one player to finish their experiment before you start constructing yours. Just try to make it clear when you have finished.

- At any time, a player can guess the rule. They announce clearly that they want to guess, and then play pauses until they're done. If they take too long, the universe should probably set a time limit, but I don't think this has been a problem.

- If they guess correctly, they win. If not, they can no longer guess. The universe provides a counterexample, and play resumes.

- I've never run out of players before someone guesses the rule. Two options would be "everyone gets their guess back" and "game over".

Another change (starting from the standard rules) that I think might speed games up is the ability to spend multiple funding tokens to publish a paper out of turn. But I've only run this once, with a cost of three tokens, and no one took advantage of it. Maybe I'll try with two at some point.

Posted on 23 September 2025


I've previously complained about how people often repeat a quote that starts with

The reason that the rich were so rich, Vimes reasoned, was because they managed to spend less money.

(Terry Pratchett, Men at Arms)

…and then don't seem to realize that the thing they're quoting is saying "rich people spend less money than poor people, and that's why they're rich". It seems to me that people interpret it as saying various different things, but rarely the thing it's quite obviously saying.

Here's an oversight in my previous complaint: I didn't look at Wikipedia. I picked up vibes from a few internet randos, but not the specific internet randos who edit the world's premier encyclopedia.

Part of what I want to point out here is "there's no consensus on what the term boots theory refers to". If I'm wrong about that, and just happened to read the wrong internet randos, it seems likely that Wikipedia will tell me what the consensus is. So let's take a look.

As I write, the most recent revision is from March 17 2025, and it tells us:

The Sam Vimes theory of socioeconomic unfairness, often called simply the boots theory, is an economic theory that people in poverty have to buy cheap and subpar products that need to be replaced repeatedly, proving more expensive in the long run than more expensive items.

Come to think of it, this is actually a stronger claim than what I called level 1 boots theory. I described that as "being rich enables you to spend less money on things", but this is a specific way that being rich enables you to spend less money on things. Maybe it is worth having a term for this distinct from "ghetto tax", which is what I suggested previously. (But that term shouldn't necessarily be "boots theory".)

So that's what Wikipedia says "boots theory" refers to. Let's follow its citations and see why Wikipedia says that.

Wikipedia:

In the Discworld series of novels by Terry Pratchett, Sam Vimes is the captain of the City Watch of the fictional city-state of Ankh-Morpork.[1][2]

This doesn't talk about boots theory at all, but following the citations anyway:

[1] is The Guardian, Terry Pratchett estate backs Jack Monroe's idea for 'Vimes Boots' poverty index. This is an article about someone named Jack Monroe creating a price index, intended as an alternative to CPI, and naming it the "Vimes Boots Index".

As a source for Wikipedia's claim, the relevant part of this is quoting Rhianna Pratchett, who says:

My father used his anger about inequality, classism, xenophobia and bigotry to help power the moral core of his work. One of his most famous lightning-rods for this was Commander Vimes of the Ankh-Morpork City Watch

I note pedantically that Commander is a different rank than Captain. (Vimes is promoted from Captain to Commander in Men at Arms.)

On the subject of boots theory, we have the original quote from Men at Arms, starting (as usual) with "the reason that the rich were so rich". Rhianna calls it a "musing on how expensive it is to be poor via the cost of boots".

We're not told how the index is calculated or how it relates to boots theory except that it's "named in honour" of it.

(Incidentally, Monroe's own Wikipedia page doesn't mention the Vimes Boots Index at all.)

[2] is an NYT financial advice column, I think? Spend the Money for the Good Boots, and Wear Them Forever. It mostly boils down to someone giving an example where buying an expensive thing now saved money in the long run compared to buying cheap things. He bought \$300 ski pants that have lasted 17 years and counting, and claims that if he'd bought \$50 ski pants instead, he'd have had to buy a new pair every year, spending over \$800.

Note, there's an important calculation that he skips. If he'd invested \$300 in the S&P 500 in 1999, this site claims it would have been worth \$762 nominal in 2016, or \$528 inflation-adjusted. So yes, if he has the numbers right, it seems like this was probably a good financial investment on his part.
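Here's that comparison as a rough Python sketch, using only the figures quoted above. The names are mine, and it treats the yearly \$50 purchases as a simple nominal sum rather than modelling what the cheap-pants buyer could have done with the money he hadn't spent yet, so take it as a sanity check rather than a proper analysis.

```python
# Figures from the column and from the investment calculator quoted above.
EXPENSIVE_PRICE = 300      # one pair of ski pants, bought in 1999
CHEAP_PRICE = 50           # one pair per year, per the columnist's claim
YEARS = 17                 # 1999 to 2016
SP500_NOMINAL_2016 = 762   # claimed 2016 value of $300 invested in 1999


def cheap_total(price_per_pair: int, years: int) -> int:
    """Total nominal spend when a new cheap pair is bought every year."""
    return price_per_pair * years


cheap_spend = cheap_total(CHEAP_PRICE, YEARS)

# Nominal amount by which the cheap route exceeds what the invested $300 grew to.
margin_over_investment = cheap_spend - SP500_NOMINAL_2016

print(cheap_spend)             # 850
print(margin_over_investment)  # 88
```

So on his numbers, the cheap route costs more than the \$300 would have grown to, which is why the expensive pants still come out ahead.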

Anyway. This article does back the cited claim (albeit without using the phrase "City Watch"). On the subject of boots theory, it includes the original quote (starting, as usual, with "the reason that the rich were so rich") and doesn't take it any further than "you can spend more money now to spend less money in total". The example it gives matches Wikipedia's definition of boots theory.

Back to Wikipedia:

In the 1993 novel Men at Arms, the second novel focusing on the City Watch through Vimes' perspective, Vimes muses on how expensive it is to be poor:[2][3]

We just discussed [2]. [3] is Gizmodo, Discworld's Famous 'Boots Theory' Is Putting a Spotlight on Poverty in the UK. This is another announcement of the Vimes Boots Index. It gives a little more detail on how it relates to boots theory:

Monroe drew parallels to the famous passage's explanation to how low-income families in the UK have seen supermarkets either noticeably increase prices on "budget" lines of basic food and goods (an example Monroe used cited an over 300% increase on a bag of rice at one store in the last year, while cutting the amount of rice in the bag in half) as the country faces the continuing economic effects of Brexit, the covid-19 pandemic, and general supply chain issues. With these lines, aimed at low-income households, either pricing up or being replaced with more expensive store-brand ranges, struggling families are being forced to turn to charities and food banks to sustain themselves, reckoning with over a decade of cuts to social support programs by Britain's series of Conservative governments, including Boris Johnson's current cabinet, itself rather busy right now trying to hide a long string of embarrassing, potentially illegal social gatherings by the Prime Minister during covid-19 lockdowns.

…except that I don't see much connection. But boy, impressive segue in that second sentence.

Wikipedia now gives the original quote, starting as usual with "the reason that the rich were so rich". This is citation 4, pointing at Men at Arms. Then:

In the New Statesman, Marc Burrows hypothesized Pratchett drew inspiration from Robert Tressell's 1914 novel The Ragged-Trousered Philanthropists.[5]

[5] is the New Statesman, Your best ally against injustice? Terry Pratchett. This is another piece talking about the Vimes Boots Index. It gives an extra hint about how it's calculated:

[Monroe has] been monitoring the true "cost of living" for over a decade – not the one the ONS espouses, based on the price of 700 pre-selected goods including (as Monroe wryly notes in their article in the Observer) "a leg of lamb, bedroom furniture, a television and champagne", but the ones familiar to those who have no choice but to spend the absolute minimum: value ranges, budget items and absolute basics. The ONS bases its statistics on average prices, but for millions of the country’s poorest, average prices don’t reflect reality at all.

On the subject of boots theory, we get the original quote, refreshingly not starting with "the reason that the rich were so rich" - it starts with "take boots, for example". As commentary, it says "being poor is actually more expensive than being wealthy".

(That sounds a lot like "poor people spend more money than rich people", but they're not quite the same. If I say "owning an Aston Martin is more expensive than owning a Ford", you probably assume that the cost of driving, maintaining and insuring a Martin in some minimal adequate condition is higher than doing that for a Ford. But some people will voluntarily spend more than the minimum, and some Ford owners will spend more on their cars than some Martin owners; and Ford owners may well spend more than Martin owners on non-car stuff. So it would be coherent to say something like: "being poor is more expensive than being rich, in the sense that poor people are forced to spend more money on daily living activities. But rich people typically spend more than they're forced to, and more than poor people overall, on daily living activities and other stuff." I'm not sure if I've previously realized this distinction.)

Wikipedia:

In the book Fashion in the Fairy Tale Tradition, Rebecca-Anne C. Do Rozario argued "shoes and economic autonomy are inexorably linked" in fairy tales, citing the Boots theory as "particularly relevant" and "an insightful metaphor for inequality".[6]

[6] is just a link to page 180 of that book. It doesn't have much more than what Wikipedia gives:

Shoes and economic autonomy are inexorably linked. Terry Pratchett, with his firm understanding of how fairy tales operate, places the "'Boots' theory of socio-economic unfairness" in the mind of Sam Vimes in Men at Arms (1993). It is an insightful metaphor for inequality articulated through shoe-wear: "A man who could afford fifty dollars had a pair of boots that'd still be keeping his feet dry in ten years' time, while a poor man who could only afford cheap boots would have spent a hundred dollars on boots in the same time and would still have wet feet."

This might be the shortest extract of the quote I've seen yet.

("Particularly relevant" seems to come from a footnote on page 213. I can locate it through search but there's no preview available for the page.)

Wikipedia:

In 2013, an article by the US ConsumerAffairs made reference to the theory in regard to purchasing items on credit, specifically regarding children's boots from the retailer Fingerhut; a \$25 pair of boots, at the interest rates being offered, would cost \$37 if purchased over seven months.[7]

This seems to switch the idea from "in the long run, repeatedly buying cheap things costs more than buying expensive things" to "buying things with money you don't hand over yet costs more than buying things with money you hand over immediately". Or, "people won't typically lend you money for free".
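To put a number on the cost of that loan, here's a minimal Python sketch using only the two prices from the Wikipedia summary. The variable names are mine. It doesn't try to work out an annual interest rate, because that depends on a payment schedule we aren't given.

```python
# Prices from the Wikipedia summary of the ConsumerAffairs article.
CASH_PRICE = 25     # dollars, paid up front
CREDIT_TOTAL = 37   # dollars, total paid over seven months on credit

extra_paid = CREDIT_TOTAL - CASH_PRICE   # what the credit itself costs
markup = extra_paid / CASH_PRICE         # as a fraction of the cash price

print(extra_paid)        # 12
print(f"{markup:.0%}")   # 48%
```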

The article (ConsumerAffairs, Fingerhut boots and the Vimes' Boots paradox) even notices this leap. ("though we haven't tested this ourselves, we're sure that Carrini boots or Reebok sneakers bought from Fingerhut are just as good as Carrini or Reebok items bought elsewhere.")

We're given the original quote, starting as usual with "the reason that the rich were so rich".

Wikipedia:

In 2016, the left-wing blog Dorset Eye also ran an article discussing the theory, giving fuel poverty in the United Kingdom as an example of its application, citing a 2014 Office for National Statistics (ONS) report that those who pre-paid for electricity—who were most likely to be subject to fuel poverty—paid 8% more on their electricity bills than those who paid by direct debit.[8]

Dorset Eye, Mike Deverell discusses five reasons why those with less end up paying more.

Welp, uh, this is kind of a case where people will lend you money for free, if you have money. It fits a broad "being poor is expensive" narrative, but it's not a case where rich people buy a more expensive thing up front to save money in the long run.

Other than fuel poverty, the article gives four more reasons "why it's so difficult to climb out of the poverty trap": VAT, other taxes, food poverty and austerity. None seem to have any relation to boots theory. The article doesn't explicitly say they're examples of it, and Wikipedia doesn't mention them, so I'll leave them out.

We get the original quote, starting with "the reason that the rich were so rich".

Wikipedia:

In a 2020 discussion paper for the Social and Political Research Foundation, Sitara Srinivas used the theory to analyze how sustainable fashion is inaccessible compared to fast fashion.[9]

Stitching a new narrative: engaging with sustainability in the fashion industry. The relevant part is:

Because of the higher pay to workers and quality of materials used, sustainable fashion usually is more expensive. As previously stated, a fast fashion brand white t-shirt costs on average Rs 824. A sustainably produced t-shirt on the other hand costs on average Rs 4825[4]. For the average working or middle class consumer, who may not have the money to invest in a piece of quality clothing that will last long, but also at the same time has the aspiration to dress fashionably, sustainable fashion then becomes an unachievable goal.

The 'Boots theory of socio-economic unfairness' engages with this phenomenon. Derived from a paragraph in Terry Pratchett's novel Men at Arms, it explores the idea that quality costs more in the short term, with a purchase being more expensive at first, and the cost per use reducing over time. For someone that cannot afford a quality piece of clothing, cheap clothing becomes the best option. While clothing that is made sustainably may last longer, fast fashion remains cheaper and hence more accessible (Ellen MacArthur Foundation).

Followed by the original quote, starting as usual with "the reason that the rich were so rich".

So this broadly matches Wikipedia's description of the term. It doesn't come right out and say "buying the expensive t-shirt is cheaper in the long run". But it's at least hinting in that direction.
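For what it's worth, here's a small Python sketch of what "cheaper in the long run" would have to mean with the paper's two average prices. The names are mine, and the paper gives no lifespans, so this only computes the break-even point: how many times longer the sustainable t-shirt would need to last.

```python
# Average prices quoted in the discussion paper, in rupees.
FAST_FASHION_PRICE = 824
SUSTAINABLE_PRICE = 4825

# The sustainable shirt has the lower cost per year of wear only if it
# lasts more than this many times as long as the fast fashion one.
break_even_ratio = SUSTAINABLE_PRICE / FAST_FASHION_PRICE

print(round(break_even_ratio, 2))  # 5.86
```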

Wikipedia:

In an article titled "The Price of Poverty" published in Tribune Magazine in 2022, the theory was cited as explaining the economic predicament in the United Kingdom. Examples provided included the higher cost of renting compared to home ownership, higher interest rates for loans to impoverished people, the effects of food poverty on educational advancement, and healthcare costs.[10]

Tribune, The Price of Poverty. This one doesn't give the original quote at all, describing boots theory in its own words:

This obvious injustice—subsidies for businesses, cost hikes for workers—is only one example of the reality spread across our economic system: that being in poverty is much more expensive than being rich.

Vimes' 'Boots Theory of Socio-Economic Unfairness'

To understand why poverty is so expensive, we can look to Samuel Vimes, Captain of the City Watch and protagonist of Terry Pratchett's Discworld novel Men at Arms. Vimes uses his 'Boots theory of socio-economic unfairness' to outline how the nobility of his city, Ankh-Morpork, could live twice as comfortably as him while spending half the money.

Vimes earned 38 dollars per month as captain of the watch. A really good pair of boots, the kind that would last for around 10 years, would cost around 50 dollars—far more than his monthly salary. As a result, he would have to buy the cheaper, lower-quality boots for 10 dollars, which would last for one year at most. Over a 10-year period, Vimes would spend 100 dollars on 10 pairs of boots (and would still have wet feet), whereas the noble would have only spent 50 dollars on one pair of boots during the same period.

(I repeat that Vimes is wrong about the nobility - at minimum, about the specific nobility he's thinking of, who is the richest woman in the city and his fiancée - spending less money than him.)
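The arithmetic in that description is easy to check. Here's a minimal Python sketch; the function and its parameters are mine, the figures are Tribune's, and I've taken "one year at most" as exactly one year.

```python
def total_cost(price: int, lifespan_years: int, horizon_years: int) -> int:
    """Spend on boots over the horizon, buying a new pair each time one wears out."""
    pairs_needed = -(-horizon_years // lifespan_years)  # ceiling division
    return price * pairs_needed


MONTHLY_SALARY = 38  # dollars, Vimes's pay as captain

cheap = total_cost(price=10, lifespan_years=1, horizon_years=10)
good = total_cost(price=50, lifespan_years=10, horizon_years=10)

print(cheap, good)          # 100 50
print(50 > MONTHLY_SALARY)  # True: the good pair costs more than a month's pay
```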

Here are the examples the article gives:

subsidies for businesses, cost hikes for workers

Roughly speaking, I think the idea here is "rich people's cost of living is going down and poor people's is going up". But that's talking about acceleration, not velocity.

Housing costs for private tenants have jumped by nearly 50% above the general rate of inflation in the last 25 years. House prices have also soared, but home ownership remains, often, the cheaper option.

So this is "spending more money up front is cheaper in the long term". You can even kind of make it fit the original definition: when you rent, you repeatedly purchase "the right to live in this place for the next month", but when you buy, you purchase that right once and keep it indefinitely. And the rights you purchase when you rent are typically worse than the rights you purchase when you buy (e.g. more restrictive about pets, or decoration, or interior layout).

(Note: costs of home ownership here do take into account "interest lost by paying a deposit rather than saving.")

Borrowing costs are also much higher for poor people because they are seen by lenders as higher risk, meaning they pay more interest on debt like overdrafts and credit cards.

"Poor people get less favorable terms when borrowing money."

In 2021, a study by the Food Foundation found that the poorest fifth of British society would need to spend 40% of their income to reach the government’s healthy eating guidelines. The chronic malnutrition caused by poverty has long-term costs, both to individuals and to society as a whole.

"Poor people don't get enough food, and that causes them long term problems."

A 2016 study by the Joseph Rowntree Foundation found that healthcare spending accounted for the largest portion of additional spending associated with poverty, at £29 billion. … The British Medical Journal has warned that unless the government acts to address the Cost of Living crisis, poverty, and therefore health inequalities and the costs associated with treating and managing them, will increase.

"Poor people are less healthy, and more expensive for the government to provide healthcare to."

Remember that Wikipedia's definition is

The Sam Vimes theory of socioeconomic unfairness, often called simply the boots theory, is an economic theory that people in poverty have to buy cheap and subpar products that need to be replaced repeatedly, proving more expensive in the long run than more expensive items.

Of the citations, it seems like three back this up? One with an example about ski pants, one with an example about t-shirts, and one talking about home ownership versus renting.

Other than those, here are some things that citations relate to boots theory:

- You can spend more money now to spend less money in total. (More general than Wikipedia's definition.)

- Being poor is more expensive than being rich. (More general again.)

- Buying things with money you don't hand over yet costs more than buying things with money you hand over immediately.

- Except that sometimes it costs less.

- Poor people get less favorable terms when borrowing money.

- Costs of living are going down for rich people and up for poor people.

- Poor people don't get enough food, and that causes them long term problems.

- Poor people are less healthy, and more expensive for the government to provide healthcare to.

…and for some of these, Wikipedia even includes them inline. You don't need to click through to the citations to realize that multiple different concepts are getting confused.

(Someone in the talk page has noticed the same thing: "The instances listed here aren't really examples of the same mechanism.")

(Also, when I wrote the previous essays, multiple commenters told me what they take boots theory to mean, but none of them picked Wikipedia's definition of it.)

So I think I'm vindicated in my complaints. Wikipedia's definition of "boots theory" is not consensus, even among Wikipedia's sources. There is no consensus.

Posted on 01 August 2025


I previously wrote about Boots theory, the idea that "the rich are so rich because they spend less money". My one-sentence take is: I'm pretty sure rich people spend more money than poor people, and an observation can't be explained by a falsehood.

The popular explanation of the theory comes from Sam Vimes, a resident of Ankh-Morpork on the Discworld (which is carried on the backs of four elephants, who themselves stand on a giant turtle swimming through space). I claim that Sam Vimes doesn't have a solid understanding of 21st Century Earth Anglosphere economics, but we can hardly hold that against him. Maybe he understands Ankh-Morpork economics?

To be clear, this is beside the point of my previous essay. I was talking about 21st Century Earth Anglosphere because that's what I know; and whenever I see someone bring up boots theory, they're talking about Earth (usually 21st Century Anglosphere) and not Ankh-Morpork. But multiple commenters brought it up.

Radmonger:

you need to understand Vimes as making a distinction not between the upper class and everyone else, but the middle class and the working class, between homeowners and renters.

This is completely wrong.

Ericf:

Vimes is thinking of the landed gentry when he is considering the "rich" - that would be the top 1%, not the tippy-top super-rich. Also, in a pseudo-medivial environment, the lifestyle inequality isn't as extreme as today's 50th % vs 1%.

This is closer, but still wrong.

WTFwhatthehell:

The quote in the book is about old money families vs the poor.

JC O:

It should be noted that Vimes was specifically thinking of real generational wealth in that area. He'd spent some time in the home of a Lady from oldest and wealthiest family in the city, and saw that everything was old there, solid, built to last forever. Generations of clothing tailored to the fit of the family members, and saved if it was still in good condition, and if it was not, the fabric would be reused to make something else. Even the garden tools were old. The family owned multiple homes in various cities and in the country, and they owned sizable portions of the real estate in the city.

These are basically right. (I'm not sure about all of JC O's specific details, and I'd strike "vs the poor".) Here's the extended quote (from Men at Arms):

She was, Vimes had been told, the richest woman in Ankh-Morpork. In fact she was richer than all the other women in Ankh-Morpork rolled, if that were possible, into one.

It was going to be a strange wedding, people said. Vimes treated his social superiors with barely concealed distaste, because the women made his head ache and the men made his fists itch. And Sybil Ramkin was the last survivor of one of the oldest families in Ankh. But they'd been thrown together like twigs in a whirlpool, and had yielded to the inevitable …

When he was a little boy, Sam Vimes had thought that the very rich ate off gold plates and lived in marble houses.

He'd learned something new: the very very rich could afford to be poor. Sybil Ramkin lived in the kind of poverty that was only available to the very rich, a poverty approached from the other side. Women who were merely well-off saved up and bought dresses made of silk edged with lace and pearls, but Lady Ramkin was so rich she could afford to stomp around the place in rubber boots and a tweed skirt that had belonged to her mother. She was so rich she could afford to live on biscuits and cheese sandwiches. She was so rich she lived in three rooms in a thirty-four-roomed mansion; the rest of them were full of very expensive and very old furniture, covered in dust sheets.

The reason that the rich were so rich, Vimes reasoned, was because they managed to spend less money.

Take boots, for example. He earned thirty-eight dollars a month plus allowances. A really good pair of leather boots cost fifty dollars. But an affordable pair of boots, which were sort of okay for a season or two and then leaked like hell when the cardboard gave out, cost about ten dollars. Those were the kind of boots Vimes always bought, and wore until the soles were so thin that he could tell where he was in Ankh-Morpork on a foggy night by the feel of the cobbles.

But the thing was that good boots lasted for years and years. A man who could afford fifty dollars had a pair of boots that'd still be keeping his feet dry in ten years' time, while a poor man who could only afford cheap boots would have spent a hundred dollars on boots in the same time and would still have wet feet.

This was the Captain Samuel Vimes 'Boots' theory of socio-economic unfairness.

The point was that Sybil Ramkin hardly ever had to buy anything. The mansion was full of this big, solid furniture, bought by her ancestors. It never wore out. She had whole boxes full of jewellery which just seemed to have accumulated over the centuries. Vimes had seen a wine cellar that a regiment of speleologists could get so happily drunk in that they wouldn't mind that they'd got lost without trace.

Lady Sybil Ramkin lived quite comfortably from day to day by spending, Vimes estimated, about half as much as he did. But she spent a lot more on dragons.

So Vimes is specifically distinguishing between the tippy-top super-rich and the "merely well-off".

There's, um, a lot to unpack here. It's kind of fractally wrong.

Note that bits of this don't make sense when taken literally. "Lady Ramkin was so rich she could afford to stomp around the place in rubber boots and a tweed skirt that had belonged to her mother. She was so rich she could afford to live on biscuits and cheese sandwiches." These are not expensive things compared to the alternatives.

I think the subtext here is something like "Lady Ramkin is so rich she doesn't need to try to impress people". Looking back at Guards! Guards! (the first book featuring Vimes and Ramkin), that's spelled out more explicitly: "A couple of women were moving purposefully among the boxes. Ladies, rather. They were far too untidy to be mere women. No ordinary women would have dreamed of looking so scruffy; you needed the complete self-confidence that comes with knowing who your great-great-great-great-grandfather was before you could wear clothes like that."

I'm tempted to attribute this to personality (speculatively, "autism") rather than wealth. But I think it does rhyme with discussions of class that I've seen. (Roughly: rich people don't want you to mistake them for middle-class, so they visibly spend money. The people richer than them aren't in danger of being mistaken for middle-class, but they don't want you to think they're merely "rich", so they avoid visibly spending money.) Let's stipulate that the subtext is accurate, and move on.

The mention of wine is out of place. Rich people don't have access to durable wine that isn't depleted when drunk. If Sybil Ramkin has a lot of wine, it's not because someone made a relatively small up-front investment in wine and now there's no need to buy wine ever again. It's because someone spent a lot of money to buy a lot of wine, and it just hasn't all been drunk yet.

Next, note the contradiction here:

- Sybil Ramkin is rich because she doesn't spend much money;

- Sybil Ramkin doesn't spend much money except on dragons.

She does spend a lot of money! She spends it on dragons! So that can't be why she's rich! An observation can't be explained by a falsehood.

Could it be true, if it weren't for the dragons?

("It's wet here. Might that be from the sprinkler?" "There is no sprinkler, so no." "Okay. But if there were a sprinkler, might that be why it's wet?")

No: I'm pretty sure "Sybil Ramkin doesn't spend much money on things other than dragons" is also false. Here are some snippets from Guards! Guards!:

Vimes had completely forgotten the Watch House. "It must have been badly damaged," he ventured.

"Totally destroyed," said Lady Ramkin. "Just a patch of melted rock. So I'm letting you have a place in Pseudopolis Yard."

"Sorry?"

"Oh, my father had property all over the city," she said. "Quite useless to me, really. So I told my agent to give Sergeant Colon the keys to the old house in Pseudopolis Yard. It'll do it good to be aired."

Lady Ramkin's coach rattled into the plaza making a noise like a roulette wheel and pounded straight for Vimes, stopping in a skid that sent it juddering around in a semi-circle and forced the horses either to face the other way or plait their legs.

An effort had been made to spruce up the Ramkin mansion, he noticed. The encroaching shrubbery had been pitilessly hacked back. An elderly workman atop a ladder was nailing the stucco back on the walls while another, with a spade, was rather arbitrarily defining the line where the lawn ended and the old flower beds had begun.

To his amazement the door was eventually opened by a butler so elderly that he might have been resurrected by the knocking.

A terrible premonition took hold of Vimes at the same moment as a gust of Captivation, the most expensive perfume available anywhere in Ankh-Morpork blew past him.

Vimes was vaguely aware of a brilliant blue dress that sparkled in the candlelight, a mass of hair the colour of chestnuts, a slightly anxious face that suggested that a whole battalion of skilled painters and decorators had only just dismantled their scaffolding and gone home, and a faint creaking that said underneath it all mere corsetry was being subjected to the kind of tensions more usually found in the heart of large stars.

He must have eaten, because servants appeared out of nowhere with things stuffed with other things, and came back later and took the plates away. The butler reanimated occasionally to fill glass after glass with strange wines. The heat from the candles was enough to cook by.

Ignore that she gives away a building: that's a one-off. Stipulate that all those servants and the expensive make-up and perfume are just another one-off to seduce Vimes: that's at least partly supported in the text, since she normally does her own cooking. Ignore for some reason the two workmen. Pretend that her clothes and furniture and property need no upkeep at all - including the fancy-rather-than-practical clothes she sometimes wears throughout the book. Assume she drives the coach herself: it's a little weird that that's not mentioned, but there's no explicit mention of a driver either.

Even granting all that, she still needs to feed at least two horses! Horses, I am led to believe, are expensive. (And need a certain amount of time and attention, and do you think Sybil Ramkin spends her time taking care of horses when there are dragons to take care of?)

Do we further assume she just rents the horses every time she needs to use her coach? She walks, herself, down to someone else's stables. Then brings two horses back to her house, where she hooks them up to her coach. (Could she keep the coach at the stables? Not for free.) She drives her coach around herself, and when her business is concluded, she drops the coach back at her house, returns the horses, and walks home.

And why does she do this? If she was struggling, she might do it to save money. But she's not. She has property worth about seven million dollars a year.

I'm not willing to credit that she does this. But even if I was, how much does it cost to rent two horses for a few hours? It can't be cheap, and she does that multiple times in the book.

Plus, even when she's not explicitly trying to seduce him, she feeds Vimes bacon, fried potatoes, eggs, cake and bread pudding. Does Vimes get to eat these things often? ("Vimes thought about the meals at his lodgings. Somehow the meat was always gray, with mysterious tubes in it.")

This is not a person who lives on less money than Sam Vimes, and it's hard to believe Sam Vimes - a policeman, someone for whom "noticing things" is part of the job description, who is engaged to this woman - is ignorant of the fact.

That's based on Guards! Guards! What if we completely ignore that book, and just look at Men at Arms?

Then he dried himself off as best he could and looked at the suit on the bed.

It had been made for him by the finest tailor in the city. Sybil Ramkin had a generous heart. She was a woman out for all she could give.

The suit was blue and deep purple, with lace on the wrists and at the throat. It was the height of fashion, he had been told.

Willikins had also laid out a dressing gown with brocade on the sleeves. He put it on, and wandered into his dressing room.

That was another new thing. The rich even had rooms for dressing in, and clothes to wear while you went into the dressing rooms to get dressed.

(Hardly "rubber boots and a tweed skirt that had belonged to her mother", or "three rooms in a thirty-four-roomed mansion".)

"His lordship … that is, her ladyship's father … he required to have his back scrubbed," said Willikins.

(i.e. the butler seems to be a regular fixture.)

And this wasn't one of the old hip bath, drag-it-in-front-of-the-fire jobs, no. The Ramkin mansion collected water off the roof into a big cistern, after straining out the pigeons, and then it was heated by an ancient geyser [footnote: "Who stoked the boiler."]

(i.e. that's another person who needs to be paid.)

Lady Sybil was devoting to her wedding all the directness of thought she'd normally apply to breeding out a tendency towards floppy ears in swamp dragons. Half a dozen cooks had been busy in the kitchens for three days. They were roasting a whole ox and doing amazing stuff with rare fruit.

(Sybil Ramkin is so rich she can afford to live on biscuits and cheese sandwiches. But sometimes she likes to slum it, and spend money as if she was merely well-off.)

Also, I didn't find a convenient quote for this, but she hosts a dinner for a bunch of other rich people and somehow I doubt she asks them to pay for it.

I can offer two defenses of Vimes here. One is that all of this is from after the quote about boots theory, so - again, as long as we're pretending Guards! Guards! never happened - maybe he didn't know all this at the time, and then he'd still be embarrassingly wrong but a bit less embarrassingly wrong. Like, he'd be making up shit that defies common sense, and it's weird he knows so little about his fiancée, but he wouldn't be making up shit that contradicts what he's seen himself.

The stronger one is that it's revealed he gives away \$14/month to the widows and orphans of coppers. That increases the apparent spending power of his \$38/month. So when he thinks Sybil spends less than he does, she has more margin to do that in.

But still. C'mon.

Might Vimes have been right in general, but wrong about Sybil Ramkin?

I don't think we see much else of the very very rich. We're told Vimes doesn't either. (Men at Arms: "He hadn't had much experience with the rich and powerful.") If there's any reason for Vimes to think "the rich spend less money than the poor" is true in any sense in Ankh-Morpork, I don't know it.

So why does Vimes think those thoughts? I don't have a Watsonian explanation. One possible Doylist explanation is that Pratchett put it in the book because he wanted to comment on 20th Century Earth Anglosphere economics, and didn't notice or didn't care that it didn't make sense in context.

…but as someone who thinks it also doesn't make sense for 20th Century Earth Anglosphere economics, I think there's a slightly different explanation.

I think boots theory is a very foolish thing for Sam to believe of Ankh-Morpork, or for Pratchett to believe of Earth. But for whatever reason, lots of people do seem to believe it of Earth.

Or more accurately, lots of people interpret the description of boots theory as pointing at something they believe. I think they're wrong about some combination of "what those words mean" and "what is true" (and different people are wrong in different ways), but the point right now is that this is a thing lots of people do.

And if lots of people make a certain mistake about Earth, then it's also plausible that Pratchett made the same mistake about Ankh-Morpork, and inserted that mistake into Vimes' head. Or that Pratchett didn't make that mistake, but knew that lots of other people do, and deliberately inserted it into Vimes' head knowing it was a mistake.

So some possible theories are:

- Pratchett, wanting to comment on Earth, had Vimes think thoughts about Earth but substituted in Ankh-Morpork. Pratchett didn't notice or didn't care that those thoughts were false when thought about Ankh-Morpork.

- Pratchett had Vimes think untrue thoughts about Ankh-Morpork, that Pratchett thought were true about Ankh-Morpork. He thought that for the same reason that lots of people think the same thoughts would be true of Earth.

- Pratchett had Vimes think untrue thoughts about Ankh-Morpork, that Pratchett knew were false about Ankh-Morpork; but Pratchett thought it was a believable mistake for Vimes to make, because Pratchett had seen people making the same mistake about Earth.

But ultimately I'll probably never know, and it doesn't really matter.

Posted on 18 March 2025


I sometimes see people describe the Elm community as "very active". For example:

- Elmcraft says "The Elm community is very active."

- "Is Elm dead?" says "the community is more active than ever." (I guess that's compatible with "but still not very active", but, well, subtext.)

- Some /r/elm commenter says "The community is very active and productive." (They later clarify: "It's very active compared to similar projects. Tight focus, feature complete. People tend to compare it to dissimilar projects.")

Is this true? Let's get some statistics, including historical ones, and see what comes up.

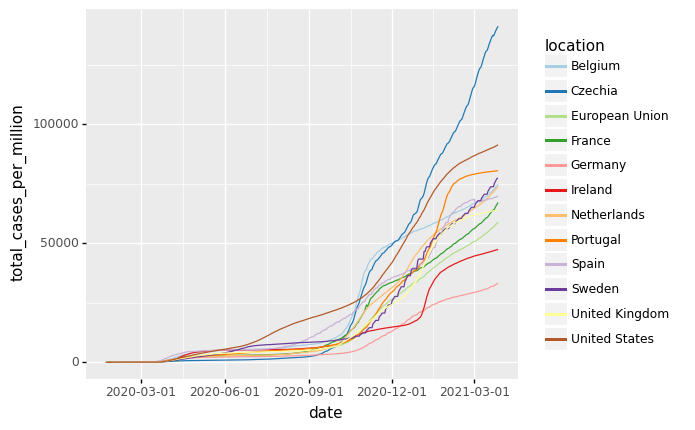

I think all this data was collected on October 28 2024. I'm splitting things out by year, so we can roughly extrapolate to the end of 2024 by multiplying numbers by 1.2.
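The 1.2 factor can be sanity-checked from the collection date. This is only a rough sketch: it assumes the data covers January 1 through October 28 inclusive and that activity is spread evenly over the year, and the function name is made up for illustration.

```python
from datetime import date

def extrapolation_factor(collected: date) -> float:
    """Scale factor from year-to-date counts to a full-year estimate.

    Assumes activity is spread evenly across the year, and that the
    collection day itself is included in the data.
    """
    days_elapsed = collected.timetuple().tm_yday
    days_in_year = date(collected.year, 12, 31).timetuple().tm_yday
    return days_in_year / days_elapsed

factor = extrapolation_factor(date(2024, 10, 28))
print(round(factor, 2))  # 1.21, close enough to the 1.2 used here
```

October 28 is day 302 of a 366-day year, so the exact factor is a touch over 1.2; the difference is too small to matter for the extrapolated rows.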

Subreddit

I could export 951 posts from reddit. (That's a weird number; I suspect it means there were 49 posts I couldn't see.) The oldest were in December 2019, which means I have about 4⅚ years of data. In a given calendar year, I can easily count: how many posts were there? And how many comments were there, on posts made in that calendar year? (So a comment in January 2022 on a post from December 2021, would be counted for 2021.)
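To make the counting rule concrete, here is a minimal sketch of the tally. The `(created, num_comments)` record shape and the sample data are assumptions for illustration, not the format of the actual reddit export.

```python
from collections import Counter
from datetime import date

def tally_by_post_year(posts):
    """Count posts and comments per calendar year.

    `posts` is an iterable of (created, num_comments) pairs. Comments are
    attributed to the year the post was created, not the year the comment
    was written.
    """
    post_counts = Counter()
    comment_counts = Counter()
    for created, num_comments in posts:
        post_counts[created.year] += 1
        comment_counts[created.year] += num_comments
    return post_counts, comment_counts

# Made-up sample data, not the real export.
sample = [
    (date(2021, 12, 30), 5),  # includes any comments left in January 2022
    (date(2022, 1, 2), 3),
    (date(2022, 6, 15), 0),
]
post_counts, comment_counts = tally_by_post_year(sample)
print(post_counts[2021], comment_counts[2021])  # 1 5
print(post_counts[2022], comment_counts[2022])  # 2 3
```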

| Year | Posts | Comments |
| --- | --- | --- |
| 2020 | 296 | 2156 |
| 2021 | 236 | 1339 |
| 2022 | 215 | 1074 |
| 2023 | 132 | 639 |
| 2024 (extrapolated) | 76 | 505 |
| 2024 (raw) | 63 | 421 |

By either measure, 2024 has about a quarter of 2020 activity levels.

Discourse

I got a list of every topic on the discourse, with its creation date, "last updated" date (probably date of last reply in most cases), number of replies and number of views. The first post was in November 2017.

| Year | Posts (C) | Replies (C) | Views (C) | Posts (U) | Replies (U) | Views (U) |
| --- | --- | --- | --- | --- | --- | --- |
| 2017 | 46 | 366 | 90830 | 29 | 151 | 46081 |
| 2018 | 819 | 5273 | 1634539 | 817 | 5284 | 1617704 |
| 2019 | 685 | 4437 | 1113453 | 695 | 4488 | 1137001 |
| 2020 | 610 | 4104 | 882214 | 613 | 4201 | 908361 |
| 2021 | 482 | 3502 | 603199 | 485 | 3528 | 608075 |
| 2022 | 332 | 1698 | 336095 | 329 | 1548 | 319918 |
| 2023 | 294 | 1544 | 221806 | 298 | 1711 | 243834 |
| 2024 (extrapolated) | 224 | 1422 | 115503 | 227 | 1438 | 116898 |
| 2024 (raw) | 187 | 1185 | 96253 | 189 | 1198 | 97415 |

The "C" columns count according to a post's creation date, and the "U" columns count by "last updated" date.

So posts and replies have fallen to about 35% of 2020 levels. Views have fallen to about 13%.

Package releases

For every package listed on the repository, I got the release dates of all its versions. So we can also ask how frequently packages are getting released, and we can break it up into initial/major/minor/patch updates.

My understanding is: if a package has any version compatible with 0.19, then every version of that package is listed, including ones not compatible with 0.19. If not, it's not listed at all. So numbers before 2019 are suspect (0.19 was released in August 2018).
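For concreteness, this is roughly how releases can be bucketed into initial/major/minor/patch. It's a sketch under my own assumptions, not necessarily the exact method used for the table: versions are `MAJOR.MINOR.PATCH` strings, the lowest version of a package counts as its initial release, and `classify_releases` is a hypothetical helper name.

```python
def classify_releases(versions):
    """Label each release of one package as initial/major/minor/patch.

    `versions` is a list of "MAJOR.MINOR.PATCH" strings for one package.
    The lowest version is treated as the initial release; every later one
    is labelled by the most significant component that changed.
    """
    parsed = sorted(tuple(int(part) for part in v.split(".")) for v in versions)
    labels = {}
    previous = None
    for current in parsed:
        if previous is None:
            kind = "initial"
        elif current[0] != previous[0]:
            kind = "major"
        elif current[1] != previous[1]:
            kind = "minor"
        else:
            kind = "patch"
        labels[".".join(map(str, current))] = kind
        previous = current
    return labels

print(classify_releases(["1.0.0", "1.0.1", "1.1.0", "2.0.0"]))
```

Sorting by version number rather than release date is a simplification; a package that publishes a patch to an old major version after a newer one exists would need more care.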

| Year | Total | Initial | Major | Minor | Patch |
| --- | --- | --- | --- | --- | --- |
| 2014 | 1 | 1 | 0 | 0 | 0 |
| 2015 | 130 | 24 | 28 | 31 | 47 |
| 2016 | 666 | 102 | 137 | 145 | 282 |
| 2017 | 852 | 170 | 147 | 208 | 327 |
| 2018 | 1897 | 320 | 432 | 337 | 808 |
| 2019 | 1846 | 343 | 321 | 373 | 809 |
| 2020 | 1669 | 288 | 343 | 366 | 672 |
| 2021 | 1703 | 225 | 385 | 359 | 734 |
| 2022 | 1235 | 175 | 277 | 289 | 494 |
| 2023 | 1016 | 155 | 223 | 255 | 383 |
| 2024 (extrapolated) | 866 | 137 | 167 | 204 | 359 |
| 2024 (raw) | 722 | 114 | 139 | 170 | 299 |

Package releases have declined by about half since 2020. Initial (0.48x) and major (0.49x) releases have gone down slightly faster than minor (0.56x) and patch (0.53x) ones, which might mean something or might just be noise.
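Those multipliers are just the extrapolated 2024 row divided by the 2020 row. A quick sketch to reproduce them, with the numbers copied from the table and the variable names being my own:

```python
# Rows copied from the package releases table.
releases_2020 = {"total": 1669, "initial": 288, "major": 343, "minor": 366, "patch": 672}
# 2024 values are the extrapolated ones (raw counts scaled by 1.2).
releases_2024 = {"total": 866, "initial": 137, "major": 167, "minor": 204, "patch": 359}

ratios = {
    kind: round(releases_2024[kind] / releases_2020[kind], 2)
    for kind in releases_2020
}
print(ratios)
# {'total': 0.52, 'initial': 0.48, 'major': 0.49, 'minor': 0.56, 'patch': 0.53}
```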

Where else should we look?

The official community page gives a few places someone might try to get started. The discourse and subreddit are included. Looking at the others:

- The twitter has had two posts in 2024, both in May.

- Elm weekly, from a quick glance, seems to have had more-or-less weekly posts going back many years. Kudos!

- I once joined the slack but I don't know how to access it any more, so I can't see how active it is. Even if I could, I dunno if I could see how active it used to be.

- I don't feel like trying to figure out anything in depth from meetup. I briefly note that I'm given a list of "All Elm Meetup groups", which has six entries, of which exactly one appears to be an Elm meetup group.

- I'm not sure what this ElmBridge thing is.

For things not linked from there:

- I'm aware that Elm Camp has run twice now, in 2023 and 2024, and is planning 2025.

- There's an Elm online meetup which lately seems to meet for approximately four hours a year.

- There are nine other Elm meetups listed on that site. Most have had no meetups ever; none of them have had more than one in 2024.

- The Elm radio podcast hasn't released an episode in 2024. Elm town seems roughly monthly right now.

- hnhiring.com shows generally 0-1 Elm jobs/month since 2023. There was also a dry spell from April 2019 to November 2020, but it's clearly lower right now than in 2018, 2021 and 2022.

- Elmcraft is a website that I think is trying to be a community hub or something? Other than linking to Elm Weekly and the podcasts, it has a "featured article" (from April) and "latest videos" (most recently from 2023).

- How many long-form articles are being written about Elm? Not sure if there's an easy way to get stats on this.

- The elm tag on StackOverflow has had 6 questions asked this year, 22 in 2023. I couldn't be bothered to count further back.

- Github also lets you search for "repositories written partially in language, created between date1 and date2". So e.g. elm repos created in 2023. Quick summary: 2.6k in 2019; 2.3k in 2020; 1.7k in 2021; 1.5k in 2022; 1.2k in 2023; 731 so far in 2024 (extrapolate to 877).

My take

I do not think the Elm community is "very active" by most reasonable standards. For example:

- I think that if someone asks me for a "very active" programming language community they can join, and I point them at the Elm community, they will be disappointed.

- I think that if I were to look for an Elm job, I would struggle to find people who are hiring.

- I couldn't find a single Elm meetup that currently runs anywhere close to monthly, either online or anywhere in the world.

- It seems like I could read literally every comment and post written on the two main public-facing Elm forums, in about ten minutes a day. If I also wanted to read the long-form articles, when those are linked… my guess is still under twenty minutes?

If you think you have a reasonable standard by which the Elm community counts as "very active", by all means say so.

I think the idea that the Elm community is "more active than ever" is blatantly false. (That line was added in January 2022, at which point it might have been defensible. But it hasn't been removed in almost three years of shrinking, while the surrounding text has been edited multiple times.)

To be clear, none of this is intended to reflect on Elm as a language, or on the quality of its community, or anything else. I do have other frustrations, which I may or may not air at some point. But here I just intend to address the question: is it reasonable to describe the Elm community as "very active"?

(I do get the vibe that the Elm community could be reasonably described as "passionate", and that that can somewhat make up for a lack of activity. But it's not the same thing.)

Posted on 02 November 2024


Translated by Emily Wilson

1.

I didn't know what the Iliad was about. I thought it was the story of how Helen of Troy gets kidnapped, triggering the Trojan war, which lasts a long time and eventually gets settled with a wooden horse.

Instead it's just a few days, nine years into that war. The Greeks are camped on the shores near Troy. Agamemnon, King of the Greeks, refuses to return a kidnapped woman to her father for ransom. (Lots of women get kidnapped.) Apollo smites the Greeks with arrows which are plague, and after a while the other Greeks get annoyed enough to tell Agamemnon off. Achilles is most vocal, so Agamemnon returns that woman but takes one of Achilles' kidnapped women instead.

Achilles gets upset and decides to stop fighting for Agamemnon. He prays to his mother, a goddess, wanting the Greeks to suffer ruin without him. She talks to Zeus, who arranges the whole thing, though Hera (his wife and sister) and Athena aren't happy about it because they really want Troy sacked.

So Zeus sends a dream to Agamemnon, telling him to attack Troy. Agamemnon decides to test the Greek fighters, telling them it's time to give up and sail home. So they start running back to the ships, but Athena tells Odysseus to stop them, which he does mostly by telling them to obey orders.

There's a bunch of fighting between Greeks and Trojans, and bickering among the gods, and occasionally mortals even fight gods. In the middle of it there's a one-day truce, which the Greeks use to build a wall, complete with gates. Poseidon says it's such a good wall that people will forget the wall of Troy, which upsets him because they didn't get the gods' blessing to build it. Agamemnon tries to convince Achilles to fight again by offering massive rewards, including the woman he stole earlier, whom he swears he has not had sex with as is the normal way between men and women.

Eventually the Trojans fight past the wall and reach the Greek fleet. At this point Patroclus, Achilles' bff (not explicitly lover, but people have been shipping them for thousands of years), puts on Achilles' armor and goes to fight. He's skilled and scary enough that the Trojans flee. Achilles told him not to chase them, but he does so anyway, and he's killed by Hector. Hector takes the weapons and armor, and there's a big fight for his body, but the Greeks manage to drag it back to their camp.

When Achilles finds out he's pissed. The god Hephaestus makes him a new set of weapons and armor, Agamemnon gives him the massive reward from earlier, and he goes on a killing spree. He briefly gets bored of that and stops to capture twelve Trojan kids, but quickly resumes. Eventually all the Trojans except Hector flee into Troy. He kills Hector and drags the body around the city then back to camp. There's a funeral for Patroclus, which includes killing those twelve kids, but then every morning he just keeps dragging Hector's body around Patroclus' pyre. The gods don't let it get damaged, but this is still extremely poor form.

Eventually the gods get Hector's father Priam to go talk to him. Hermes disguises himself as a super hot Greek soldier to guide Priam to Achilles' tent. Priam begs and also offers a large ransom, and Achilles returns the body and gives them twelve days for a funeral before the fighting restarts. Most of this is gathering firewood.

2.

Some excerpts.

Diomedes meets Glaucus on the battlefield, and asks his backstory to make sure he's not a god. Glaucus tells the story of his grandfather Bellerophon, and Diomedes realizes that his own grandfather had been friends with Bellerophon:

The master of the war cry, Diomedes,

was glad to hear these words. He fixed his spear

firm in the earth that feeds the world, and spoke

in friendship to the shepherd of the people.

"So you must be an old guest-friend of mine,

through our forefathers. Oeneus once hosted

noble Bellerophon inside his house

and kept him as his guest for twenty days.

They gave each other splendid gifts of friendship.

Oeneus gave a shining belt, adorned

with purple, and Bellerophon gave him

a double-handled golden cup. I left it

back home inside my house when I came here.

But I do not remember Tydeus.

He left me when I was a tiny baby,

during the war at Thebes when Greeks were dying.

Now you and I are also loving guest-friends,

and I will visit you one day in Argos,

and you will come to visit me in Lycia,

whenever I arrive back home again.

So let us now avoid each other's spears,

even amid the thickest battle scrum.

Plenty of Trojans and their famous allies

are left for me to slaughter, when a god

or my quick feet enable me to catch them.

And you have many other Greeks to kill

whenever you are able. Let us now

exchange our arms and armor with each other,

so other men will know that we are proud

to be each other's guest-friends through our fathers."

With this, the two jumped off their chariots

and grasped each other's hands and swore the oath.

Then Zeus robbed Glaucus of his wits. He traded

his armor with the son of Tydeus,

and gave up gold for bronze—gold armor, worth

a hundred oxen, for a set worth nine.

At one point Hera dolls herself up to make Zeus want to sleep with her. This is the flirting game of Zeus, greatest of all the gods:

"You can go later on that journey, Hera,

but now let us enjoy some time in bed.

Let us make love. Such strong desire has never

suffused my senses or subdued my heart

for any goddess or for any woman

as I feel now for you. Not even when

I lusted for the wife of Ixion,

and got her pregnant with Pirithous,

a councillor as wise as any god.

Not even when I wanted Danae,

the daughter of Acrisius, a woman

with pretty ankles, and I got her pregnant

with Perseus, the best of warriors.

Not even when I lusted for the famous

Europa, child of Phoenix, and I fathered

Minos on her, and godlike Rhadamanthus.

Not even when I wanted Semele,

or when in Thebes I lusted for Alcmene,

who birthed heroic Heracles, my son—

and Semele gave birth to Dionysus,

the joy of mortals. And not even when

I lusted for the goddess, Queen Demeter,

who has such beautiful, well-braided hair—

not even when I wanted famous Leto,

not even when I wanted you yourself—

I never wanted anyone before

as much as I want you right now. Such sweet

desire for you has taken hold of me."

3.

It feels kinda blasphemous to say, but by modern standards, I don't think the Iliad is very good. Sorry Homer.

Not that there's nothing to like. I enjoyed some turns of phrase, though I don't remember them now. And it was the kind of not-very-good that still left me interested enough to keep listening. I'm sure there's a bunch of other good stuff to say about it too.

But also a lot that I'd criticize, mostly on a technical level. The "what actually happens" of it is fine, but doesn't particularly stand out. But the writing quality is frequently bad.

A lot of the fighting was of the form: Diomedes stabbed (name) in the chest. He was from (town) and had once (random piece of backstory), and he died. (Name) was killed by Diomedes, who stabbed him in the throat; his wife and children would never see him again. Then Diomedes stabbed (name) in the thigh, …

So I got basically no sense of what it was like to be on the battlefield. How large was it? How closely packed are people during fighting? How long does it take to strip someone of their armor and why isn't it a virtual guarantee that someone else will stab you while you do? The logistics of the war are a mystery to me too: how many Greeks are there and where do they get all their food? We're told how many ships each of the commanders brought, but how many soldiers and how many servants to a ship?

I also had very little sense of time, distance, or the motivations or powers of the gods.

There were long lists that bored me, and sometimes Homer seemed to be getting paid by the word—lots of passages were repeated verbatim, and a few repeated again. (Agamemnon dictates the reward he'll offer Achilles to a messenger, then the messenger passes it on to Achilles, and we're told it again after Patroclus' death.)

We're often told that some characters are the best at something or other, but given little reason to believe it. Notably, Hector is supposedly the most fearsome Trojan fighter, but he doesn't live up to his reputation. He almost loses one-on-one against Ajax before the gods intervene to stop the battle; he gets badly injured fighting Ajax again; and even after being healed, he only takes down Patroclus after Patroclus has been badly wounded by someone else. And Achilles is supposed to be the fastest runner, but when he chases after Hector, he doesn't catch up until Hector stops running away. Lots of people are described as "favored by Zeus" but Zeus doesn't seem to do jack for them.

Even when the narrative supports the narrator, it feels cheap. Achilles is supposedly the best Greek fighter, and when he fights, he wins. So that fits. But how did he become so good at it? Did he train harder? Does he have some secret technique? Supernatural strength? We're not told. (His invulnerability-except-heel isn't a part of this story, and half of everyone is the son of some god or goddess so that's no advantage to him. To be fair: a reader points out that Superman stories don't necessarily give his backstory either.)

The poem is in iambic pentameter, with occasional deviations at the beginnings and ends of lines. I guess that's technically impressive, but I mostly didn't notice. I also didn't notice any pattern in where the line breaks occur, so the "pentameter" part of it seems mostly irrelevant. If it had been unmetered, I don't think I would have enjoyed it noticeably less.

Is this just my personal tastes, such that other people would really enjoy the book? I dunno, probably at least a bit, and I've spoken to at least one person who said he did really enjoy it. Still, my honest guess is that if the Iliad was published for the first time today, it wouldn't be especially well received.

If it's not just me, is the problem that writers today are better at writing than writers a long time ago? Or that they're operating under different constraints? (The Iliad was originally memorized, and meter and repetition would have helped with that.) Or do readers today just have different tastes than readers a long time ago? I don't know which of these is most true. I lean towards "writers are better" but I don't really want to try making the argument. I don't think it matters much, but feel free to replace "the Iliad isn't very good by modern standards" with "the Iliad doesn't suit modern tastes" or "isn't well optimized for today's media ecosystem".

And how much is the original versus the translation, or even the narrator of the audiobook? I still don't know, but the translation is highly praised and the narration seemed fine, so I lean towards blaming the original.

4.

What is up with classics?

Like, what makes something a classic? Why do we keep reading them? Why did it feel vaguely blasphemous for me to say the Iliad isn't very good?

I'm probably not being original here, but….

I think one thing often going on is that classics will be winners of some kind of iterated Keynesian beauty contest. A Keynesian beauty contest is one where judges have incentive to vote, not for the person they think is most beautiful, but the person they think the other judges will vote for. Do a few rounds of those and you'll probably converge on a clear winner; but if you started over from scratch, maybe you'd get a different clear winner.

(I vaguely recall hearing about experiments along the lines of: set up some kind of Spotify-like system where users can see what's popular, and run with a few different isolated sets of users. In each group you get clear favorites, but the favorites aren't the same. If true, this points in the direction of IKBC dynamics being important.)

But what contests are getting run?

A cynical answer is that it's all signaling. We read something because all the educated people read it, so it's what all the educated people read. I'm not cynical enough to think this is the whole story behind classics, or even most of it, but it sure seems like it must be going on at least a little bit.

(Of course we're not going to get a whole crowd of admirers to admit "there's nothing here really, we just pretend to like this to seem sophisticated". So even if this is most of the story, we might not expect to find a clear example.)

To the extent that this is going on, then we'd expect me to be scared to criticize the Iliad because that exposes me as uneducated. Or we might expect me to criticize it extra harshly to show how independent-minded I am. Or both! And in fact I don't fully trust myself to have judged it neutrally, without regard to its status.

My guess: this is why some people read the Iliad, but it's not the main thing that makes it a classic.

Less cynically, we might observe that classics are often referenced, and we want to get the references. I don't think this could make something a classic in the first place, but it might help to cement its status.

In fact, it's not a coincidence that I listened to the Iliad soon after reading Terra Ignota. I don't really guess this is a large part of why others read it, but maybe.

(Also not a coincidence that I read A Midsummer Night's Dream soon after watching Get Over It. Reading the source material radically changed my opinion of that film. It went from "this is pretty fun, worth watching" to "this is excellent, one of my favorite movies". Similarly, See How They Run was improved by having recently seen The Mousetrap.)

A closely related thing here is that because lots of other people have read the classics, they've also written about the classics and about reading the classics. So if you enjoy reading commentary on the thing you've just read, the classics have you covered.

Some things might be classics because they're just plain good. There was a lot of crap published around the same time, and most of it has rightly been forgotten, but some was great even by the standards of today.

Like, maybe if you published Pride and Prejudice today, it would be received as "ah yes, this is an excellent entry in the niche genre of Regency-era romance. The few hundred committed fans of that genre will be very excited, and people who dabble in it will be well-advised to pick this one out".

But as I said above, I don't think the Iliad meets that bar.

I would guess the big thing for the Iliad is that it's a window into the past. Someone might read Frankenstein because they're interested in early science fiction, or because they want to learn something about that period in time. And someone might read the Iliad because they're interested in early literature or ancient Greece.

(And this might explain why classics often seem to be found in the non-fiction section of the library or bookstore. It's not that they're not fiction, but they're not being read as fiction.)

5.

The trouble is, that needs a lot of context. And I don't know enough about ancient Greece to learn much from the Iliad. I did learn a bit—like, apparently they ate the animals they sacrificed! I guessed that that was authentic, and it was news to me.

But when Priam insults his remaining sons, is that a normal thing for him to do or are we meant to learn from this that Priam is unusually deep in grief or something?

Do slaves normally weep for their captors, or does that tell us that Patroclus was unusually kind to them?

When Diomedes gets injured stripping someone of his armor, did that happen often? Is Homer saying "guys this macho bullshit is kinda dumb, kill all the other guys first and then take the armor"?

When Nestor says "no man can change the mind of Zeus", and then shortly afterwards Agamemnon prays to Zeus and Zeus changes his mind, I can't tell if that's deliberate irony or bad writing or what.

I don't even know if the early listeners of the Iliad thought these stories were true, or embellishments on actual events, or completely fabricated.

Consider Boston Legal, which ran from 2004 to 2008, because that happens to be the show I'm watching currently. I think I understand what the writers were going for.

The show asks the question "what if there were lawyers like Alan Shore and Denny Crane". It doesn't ask the question "what if there were lawyers"—we're expected to take that profession as a background fact, to know that there are real-life lawyers whose antics loosely inspired the show. We're not supposed to wonder "why are people dressed in those silly clothes and why don't they just solve their problems by shooting each other". (Well, Denny does sometimes solve and/or cause his problems by shooting people, but.)

It explores (not very deeply) the conflicts between professional ethics and doing the right thing, and I think that works best if we understand that the professional ethics on the show aren't something the writers just made up. I'm not sure how closely they resemble actual lawyerly professional ethics, but I think they're a good match for the public perception of lawyerly professional ethics.

It shows us a deep friendship between a Democrat and a Republican, assuming we get the context that such things are kinda rare. When Denny insists that he's not gay, that marks him as mildly homophobic but not unusually so. When they have (sympathetic!) episodes on cryonics and polyamory, we're expected to know that those things are not widely practiced in society; the characters' reactions to them are meant to read as normal, not as "these characters are bigoted".

(Boston Legal also has a lot of sexual harassment, and honestly I don't know what's up with that.)

One way to think of fiction is that it's about things that might happen, that might also happen differently. What's significant is not just "what happened" but "what didn't happen; what was the space of possibilities that the actual outcome was drawn from". In Boston Legal I know a lot of ways things might turn out, which means when I'm watching I can make guesses about what's going to happen. And when it does happen, I can be like "okay yeah, that tracks": even if I hadn't thought of it myself, I can see that it fits, and I can understand why the writers might have chosen that one. In the Iliad I don't know the space of possibilities, so when something happens I'm like "okay, I guess?"

Or: fiction is in the things we're shown, and the things we're not shown even though they (fictionally) happened, and the things we're not shown because they didn't happen. Why one thing is shown and another is unshown and another doesn't happen is sometimes a marked choice and sometimes unmarked. (That is, sometimes "the writers did this for a specific reason and we can talk about why" and sometimes "this was just kind of arbitrary or default".) And if you can't read the marks you're going to be very confused.

- Shown, unmarked: the lawyers are wearing suits. Marked: Edwin Poole isn't wearing pants.

- Not-shown, unmarked: people brush their teeth. Marked: an episode ends when the characters are waiting for an extremely time-sensitive call to be answered, and we just never learn what happened.

- Doesn't happen, unmarked: someone turns into a pig in the courtroom. Marked: someone reports a colleague to the bar for violating professional ethics. (At least not as far as I've watched.)

I can't read the marks in the Iliad. I don't know what parts of what it shows are "this is how stuff just is, in Homer's world" and what parts of it are "Homer showing the audience something unusual". That makes it difficult for me to learn about ancient Greece, and also makes it difficult to know what story Homer is telling.

I listened to the audiobook of the Iliad because I happened to know that it was originally listened-to, not read, so it seemed closer to the intended experience. But in hindsight, I can't get the intended experience and I shouldn't have tried.

I think to get the most out of the Iliad, I should have read it along with copious historical notes, and deliberately treated it as "learning about ancient Greece" at least as much as "reading a fun story".

Originally submitted to the ACX book review contest 2024; not a finalist. Thanks to Linda Linsefors and Janice Roeloffs for comments.

Posted on 18 June 2024

Quick note about a thing I didn't properly realize until recently. I don't know how important it is in practice.

tl;dr: Conditional prediction markets tell you "in worlds where thing happens, does other-thing happen?" They don't tell you "if I make thing happen, will other-thing happen?"

Suppose you have a conditional prediction market like: "if Biden passes the DRESS-WELL act, will at least 100,000 Americans buy a pair of Crocs in 2025?" Let's say it's at 10%, and assume it's well calibrated (ignoring problems of liquidity and time value of money and so on).

Let's even say we have a pair of them: "if Biden doesn't pass the DRESS-WELL act, will at least 100,000 Americans buy a pair of Crocs in 2025?" This is at 5%.

This means that worlds where Biden passes the DRESS-WELL act have a 5pp higher probability of the many-Crocs event than worlds where he doesn't. (That's 5 percentage points, which in this case is a 100% higher probability. I wish we had a symbol for percentage points.)

It does not mean that Biden passing the DRESS-WELL act will increase the probability of the many-Crocs event by 5pp.

I think that the usual notation is: prediction markets tell us

\[ P(\text{many-Crocs}\, | \,\text{DRESS-WELL}) = 10\% \]

but they don't tell us

\[ P(\text{many-Crocs}\, | \mathop{do}(\text{DRESS-WELL})) = \, ?\% \]

One possibility is that "Biden passing the DRESS-WELL act" might be correlated with the event, but not causally upstream of it. Maybe the act has no impact at all; but he'll only pass it if we get early signs that Crocs sales are booming. That suggests a causal model

\[ \text{early-sales} \rightarrow \text{many-Crocs} \nleftarrow \text{DRESS-WELL} \leftarrow \text{early-sales} \]

with

\[ P(\text{many-Crocs}\, | \mathop{do}(\text{DRESS-WELL})) = P(\text{many-Crocs}) \]

(I don't know if I'm using causal diagrams right. Also, those two "early-sales"es are meant to be the same thing but I don't know how to draw that.)
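To put numbers on this first model, here's a minimal sketch. The 10% and 5% come from the two markets above. The 20% chance of early sales booming is made up purely for illustration, and so is the assumption that Biden passes the act exactly when early sales boom.

```python
from fractions import Fraction

# Assumed for illustration only: the chance that early sales boom.
P_EARLY = Fraction(1, 5)
# From the two markets. In this sketch the act passes exactly when early
# sales boom, so these are also P(many-Crocs | early-sales) and
# P(many-Crocs | no early-sales).
P_MANY_GIVEN_EARLY = Fraction(10, 100)
P_MANY_GIVEN_NOT_EARLY = Fraction(5, 100)


def p_many_given_act_observed() -> Fraction:
    """P(many-Crocs | DRESS-WELL): what the conditional market reports."""
    # Seeing the act pass tells us that early sales boomed.
    return P_MANY_GIVEN_EARLY


def p_many_given_act_forced() -> Fraction:
    """P(many-Crocs | do(DRESS-WELL)) when the act has no effect of its own.

    Forcing the act through tells us nothing about early sales, so we are
    left with the plain P(many-Crocs).
    """
    return (P_EARLY * P_MANY_GIVEN_EARLY
            + (1 - P_EARLY) * P_MANY_GIVEN_NOT_EARLY)


print(float(p_many_given_act_observed()))  # 0.1
print(float(p_many_given_act_forced()))    # 0.06
```

With these made-up inputs the market says 10%, but forcing the act through leaves the probability at 6%, which is just the overall chance of the many-Crocs event.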

But here's the thing that triggered me to write this post. We can still get the same problem if the intervention is upstream of the event. Perhaps Biden will pass the DRESS-WELL act if he thinks it will have a large effect, and not otherwise. Let's say the act has a 50% chance of increasing the probability by 3pp and a 50% chance of increasing it by 5pp. Biden can commission a study to find out which it is, and he'll only pass the act if it's 5pp. Then we have

\[ \text{size-of-impact} \rightarrow \text{many-Crocs} \leftarrow \text{DRESS-WELL} \leftarrow \text{size-of-impact} \]

\[ P(\text{many-Crocs}\, | \mathop{do}(\text{DRESS-WELL})) = \, 9\% \]
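Spelling out where the 9% comes from, as a sketch: this assumes the 5% from the second market is the baseline probability without the act, and that the 3pp or 5pp effect simply adds to it.

\[
\begin{aligned}
P(\text{many-Crocs}\, | \mathop{do}(\text{DRESS-WELL}))
  &= 0.5 \times (5\% + 3\text{pp}) + 0.5 \times (5\% + 5\text{pp}) \\
  &= 0.5 \times 8\% + 0.5 \times 10\% = 9\% \\
P(\text{many-Crocs}\, | \,\text{DRESS-WELL})
  &= 5\% + 5\text{pp} = 10\%
\end{aligned}
\]

The market reads 10% because the act only gets passed in the world where the effect is 5pp. Forcing it through regardless averages over both worlds, which gives 9%.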

I expect that sometimes you want to know the thing that prediction markets tell you, and sometimes you want to know the other thing. Good to know what they're telling you, whether or not it's what you want to know.

Some other more-or-less fictional examples:

- If Disney sues Apple for copyright infringement, will they win? A high probability might mean that Disney has a strong case, or it might mean that Disney will only sue if they decide they have a strong case.

- If the Federal Reserve raises interest rates, will inflation stay below 4%? A high probability might mean that raising interest rates reliably decreases inflation; or it might mean that the Fed won't raise them except in the unusual case that they'll decrease inflation.

- If I go on a first date with this person, will I go on a second? A high probability might mean we're likely to be compatible; or it might mean she's very selective about who she goes on first dates with.

Posted on 03 April 2024

Mostly out of curiosity, I've been looking into how cryptocurrency is taxed in the UK. It's not easy to get what I consider to be a full answer, but here's my current understanding, as far as I felt like looking into it. HMRC's internal cryptoassets manual is available but I didn't feel like reading it all, and some of it seems out of date (e.g. page CRYPTO22110 seems to have been written while Ethereum was in the process of transitioning from proof-of-work to proof-of-stake). I also have no particular reason to trust or distrust the non-government sources I use here. I am not any form of accountant and it would be surprising if I didn't get anything wrong.

My impression is HMRC tends to be pretty tolerant of people making good faith mistakes? In that if they audit you and you underpaid, they'll make you pay what you owe but you won't get in any other trouble. Maybe they'd consider "I followed the advice of some blogger who explicitly said he wasn't an accountant" to be a good faith mistake? I dunno, but if you follow my advice and get audited, I'd love to hear what the outcome is.

After I published, reddit user ec265 pointed me at another article that seems more thorough than this one. I wouldn't have bothered writing this if I'd found that sooner. I didn't spot anywhere where it disagrees with me, which is good.

Capital gains tax

Very loosely speaking, capital gains is when you buy something, wait a bit, and then sell it for a different price than you bought it for. You have an allowance which in 2023-24 is £6,000, so you only pay on any gains you have above that. The rate is 10% or 20% depending on your income.
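As a rough sketch of that arithmetic: the function name and the £8,000 example figure below are made up for illustration, and the sketch assumes the whole taxable gain is charged at a single rate.

```python
from decimal import Decimal

# Annual allowance for 2023-24, as quoted above.
ALLOWANCE_2023_24 = Decimal("6000")


def capital_gains_tax(total_gains: Decimal, rate: Decimal) -> Decimal:
    """Tax due on one year's gains above the allowance.

    `rate` is Decimal("0.10") or Decimal("0.20"), depending on income.
    Simplification: the whole taxable amount is charged at that one rate.
    """
    taxable = max(total_gains - ALLOWANCE_2023_24, Decimal("0"))
    return taxable * rate


# Hypothetical example: £8,000 of gains at the 10% rate.
print(capital_gains_tax(Decimal("8000"), Decimal("0.10")))  # 200.00
```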

But with crypto, you might buy on multiple occasions, then sell only some of what you bought. Which specific coins did you sell? There's no fact of the matter. But the law has an opinion.

Crypto works like stocks here. For stocks HMRC explains how it works in a document titled HS283 Shares and Capital Gains Tax (2023), and there's also manual page CRYPTO22200 which agrees.

The rule is that when you sell coins in a particular currency, you sell them in the following order:

- Any coins you bought that day;

- Any coins you bought in the following 30 days;

- Any coins you bought previously, averaged together as if you'd bought them all for the same price.

The "30 following days" thing is called the "bed and breakfasting" rule, and the point is to avoid wash sales where you try to deliberately pull forward a loss you haven't yet incurred for tax purposes. Wikipedia says "Wash sale rules don't apply when stock is sold at a profit", but that doesn't seem to be true in the UK. The rule applies regardless of whether you'd otherwise be selling for profit or loss.

The third bucket is called a "section 104 holding". Every time you buy coins, if they don't offset something in one of the other buckets, they go in a big pool together. You need to track the average purchase price of the coins in that pool, and when you sell, you take the purchase price to be that average. Selling doesn't affect the average purchase price of the bucket.

If there are transaction fees, they count towards the purchase price (i.e. increase the average price in the bucket) and against the sale price (i.e. decrease the profit you made). This detail isn't in HS283, but it's in a separately linked "example 3".

So suppose that at various (sufficiently distant) points in time, I

- buy 0.1 BTC for £100;

- buy 0.1 BTC for £110;

- sell 0.15 BTC for £200;

- buy 0.1 BTC for £300;

- sell 0.15 BTC for £50;

and each of these had £5 in transaction fees.

Then my section 104 holding contains:

- Initially empty.

- Then, 0.1 BTC purchased at a total of £105, average £1050/BTC.

- Then, 0.2 BTC purchased at a total of £220, average £1100/BTC.

- Then, 0.05 BTC purchased at a total of £55, average £1100/BTC.

  - Here I sold 0.15 BTC purchased at a total of £165, and I sold them for £195 after fees, so that's £30 profit.

- Then, 0.15 BTC purchased at a total of £360, average £2400/BTC.

- Then, 0 BTC purchased at a total of £0, average meaningless.

  - Here I sold 0.15 BTC purchased at a total of £360, and I sold them for £45 after fees, so that's £315 loss.
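The walkthrough above can be reproduced with a small pool tracker. This is only a sketch of the section 104 averaging as described here: the class and method names are invented, and it ignores the same-day and 30-day rules entirely.

```python
from fractions import Fraction


class Section104Pool:
    """Pooled holding tracked as quantity plus total cost (fees included)."""

    def __init__(self) -> None:
        self.quantity = Fraction(0)
        self.total_cost = Fraction(0)

    def buy(self, quantity, price, fee=0) -> None:
        # Fees count towards the purchase price.
        self.quantity += Fraction(quantity)
        self.total_cost += Fraction(price) + Fraction(fee)

    def sell(self, quantity, price, fee=0) -> Fraction:
        """Remove coins at the pool's average cost and return the gain."""
        quantity = Fraction(quantity)
        if quantity <= 0 or quantity > self.quantity:
            raise ValueError("sale must be positive and within the pool")
        # The cost basis is the sold share of the pool's total cost, so the
        # average cost of what remains is unchanged.
        cost = self.total_cost * quantity / self.quantity
        self.quantity -= quantity
        self.total_cost -= cost
        # Fees count against the sale price.
        return Fraction(price) - Fraction(fee) - cost


pool = Section104Pool()
pool.buy("0.1", 100, fee=5)
pool.buy("0.1", 110, fee=5)
first_gain = pool.sell("0.15", 200, fee=5)
pool.buy("0.1", 300, fee=5)
second_gain = pool.sell("0.15", 50, fee=5)
print(first_gain, second_gain)  # 30 -315
```

Exact fractions are used rather than floats so that amounts like 0.15 BTC don't pick up rounding noise.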

For the same-day bucket, all buys get grouped together and all sells get grouped together. For the 30-day bucket, you match transactions one at a time, the earliest buy against the earliest sell. (Unclear if you get to group them by day; I don't see anything saying you do, but if you don't then interactions with the same-day rule get weird.)

So for example, suppose the middle three events above all happened on the same day. In that case, it would work out as:

- My section 104 holding is initially empty.

- Then, it contains 0.1 BTC purchased at a total of £105, average £1050/BTC.

- Then we have three things happening on the same day.

  - Grouping buys together, I buy 0.2 BTC for £420, average £2100/BTC.

  - I sell 0.15 BTC from that bucket, which I bought for £315.

  - Sale price is £195 so that's a loss of £120.

  - The bucket now contains 0.05 BTC bought for £105, average £2100/BTC.

- That bucket enters my section 104 holding. This now contains 0.15 BTC purchased at a total of £210, average £1400/BTC.

- I sell my remaining BTC for £45, which is a loss of £165.
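The same-day version can be checked the same way. This is a sketch for this one scenario only, with invented variable names. It assumes the day's sale is no larger than the day's buys.

```python
from fractions import Fraction as F

# All amounts are in GBP with fees already applied.
pool_qty, pool_cost = F("0.1"), F(105)  # the first buy, already pooled

# Same-day rule: group the day's buys together and the day's sells together.
day_buy_qty, day_buy_cost = F("0.2"), F(115 + 305)
day_sell_qty, day_sell_proceeds = F("0.15"), F(195)

# The sale is matched against the same-day buys at their average cost.
matched_cost = day_buy_cost * day_sell_qty / day_buy_qty
same_day_gain = day_sell_proceeds - matched_cost

# Whatever is left of the day's buys joins the section 104 pool.
pool_qty += day_buy_qty - day_sell_qty
pool_cost += day_buy_cost - matched_cost

# The final sale empties the pool, so its cost basis is the whole pool.
final_gain = F(45) - pool_cost

print(same_day_gain, final_gain, same_day_gain + final_gain)  # -120 -165 -285
```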

And if the middle three all happened within 30 days of each other, then:

- My section 104 holding is initially empty.

- Then, it contains 0.1 BTC purchased at a total of £105, average £1050/BTC.

- Then, 0.2 BTC purchased at a total of £220, average £1100/BTC.

- The subsequent buy and sell get matched:

  - I buy 0.1 BTC for £305 and sell it for £130, making a loss of £175.

  - I also sell 0.05 BTC for £65, that I'd bought at £55, making a profit of £10.

  - So in total that sale makes me a loss of £165, and the 30-day bucket contains -0.05 BTC purchased at £55.

- That bucket enters my section 104 holding. This now contains 0.15 BTC purchased at a total of £165, average £1100/BTC.

- I sell my remaining BTC for £45, which is a loss of £120.
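And a sketch of the 30-day version, again for this one scenario and with invented variable names. It splits the sale proceeds pro rata between the part matched to the later buy and the part taken from the pool, which is how the figures above work out.

```python
from fractions import Fraction as F

# All amounts are in GBP with fees already applied.
pool_qty, pool_cost = F("0.2"), F(220)            # two earlier buys, pooled
sell_qty, sell_proceeds = F("0.15"), F(195)       # the sale
later_buy_qty, later_buy_cost = F("0.1"), F(305)  # buy within 30 days after

# The part of the sale matched against the later buy.
matched_qty = min(sell_qty, later_buy_qty)
matched_proceeds = sell_proceeds * matched_qty / sell_qty
matched_gain = matched_proceeds - later_buy_cost * matched_qty / later_buy_qty

# The rest of the sale comes out of the pool at its average cost.
rest_qty = sell_qty - matched_qty
rest_cost = pool_cost * rest_qty / pool_qty
rest_gain = (sell_proceeds - matched_proceeds) - rest_cost
pool_qty -= rest_qty
pool_cost -= rest_cost

# Any unmatched part of the later buy would join the pool (none here).
unmatched_qty = later_buy_qty - matched_qty
pool_qty += unmatched_qty
pool_cost += later_buy_cost * unmatched_qty / later_buy_qty

# The final sale empties the pool, so its cost basis is the whole pool.
final_gain = F(45) - pool_cost

print(matched_gain, rest_gain, final_gain)  # -175 10 -120
```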

In all cases my total loss is £285, which makes sense. But I might get taxed differently, if this happened over multiple tax years.

Some more edge cases:

- I have no idea how these rules would apply if you're playing with options or short selling. I think those are both things you can do with crypto?

- If you receive crypto as a gift, you count it as coming in at market price on the day you received it. I'm not sure exactly how that's meant to be calculated (on any given day, lots of buys and sells happened for lots of different prices on various different legible exchanges; and lots also happened outside of legible exchanges) but I assume if you google "historical bitcoin prices" and use a number you find there you're probably good. So it's as if you were gifted cash and used it to buy crypto.

- Similarly, if you give it away as a gift, it's treated as disposing of it at market price on the day, as if you'd sold it for cash and gifted the cash.

- I think in both the above cases, if you buy or sell below market price as a favor (to yourself or the buyer respectively) you still have to consider market price.